Adelheid: A Distributed Lemmatizer for Historical Dutch

Summary

With this web-application, an end user can have historical Dutch texts tokenized, lemmatized and part-of-speech tagged, using the most appropriate resources (such as lexica) for the text in question. For each specific text, the user can select the best resources from those available in CLARIN, wherever they might reside, supplemented where necessary by the user's own lexica.

Background

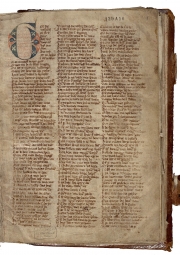

Research on transcribed manuscripts from the early centuries of the use of the Dutch language, say up to the middle of the 19th century, is seriously hampered by the lack of a standardized orthography. As an example, in the Corpus Van Reenen-Mulder, the 1055 occurrences of the lemma ‘penning’ (which would be important for the last of the research questions) show 56 different spellings, all of which a researcher would have to guess in order to find the corresponding text passages. The accessibility of the material is enhanced enormously by adding annotation layers that show normalized representations for word boundaries, lemmas and preferably also part-of-speech tags. A fully manual annotation is not only expensive, but also runs the risk of deviating from the agreed-upon normalizations, e.g. using the frequent form ‘penninc’ as the lemma instead of ‘penning’. Any annotation should therefore in principle be done semi-automatically, with an automatic system providing the most likely options and a human specialist providing further disambiguation only when deemed necessary.

The Adelheid web-application calls upon web-services that perform tokenization, tagging and lemmatization. An input text is first split into tokens by a tokenizing component, then all possible lemma-tag combinations for each token are derived by a lexical component, after which the likelihood of each lemma-tag combination is estimated by a contextual component. Each of these three components accesses specific data resources and can be expected to work best if the resources are tuned to the text’s domain and time period. The tokenizing and contextual components will be atomic web-services. The lexical component, however, will be a web-service that allows for a selection of compatible lexical resources. These take the form of lexica relating specific word forms to the lemma-tag combinations that might underlie them, each possibly with a numerical indication of its relative likelihood. The main lexica for the demonstrator scenario, tuned for 14th-century charters, have been created by Hans van Halteren on the basis of the Corpus Van Reenen-Mulder, with the likelihood indication based on counts in the corpus and statistics on spelling variation. The end user can also provide their own lexicon, e.g. containing lists of specific terms or proper names, but it will have to conform to the format of the current lexica. Metadata describing the format and provenance of the lexica will be created in the IMDI/CMDI metadata formats.
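The three-stage flow described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the in-memory lexicon layout, the function names, the tag labels and the sample entries are hypothetical and do not reflect the actual Adelheid lexicon format or web-service interfaces. In particular, the `rank` function only sorts candidates by their lexicon weight, whereas the real contextual component estimates likelihoods from the surrounding text.

```python
from collections import defaultdict

# Hypothetical lexicon layout: word form -> list of (lemma, tag, weight).
# The real Adelheid lexicon format is not specified here.
Lexicon = dict[str, list[tuple[str, str, float]]]

# Made-up sample entries, not taken from the actual lexica.
MAIN_LEXICON: Lexicon = {
    "penninc": [("penning", "N", 0.9)],
    "die": [("die", "Art", 0.6), ("die", "Pron", 0.4)],
}
USER_LEXICON: Lexicon = {
    "aleyt": [("Aleyt", "N(prop)", 1.0)],  # e.g. a user-supplied proper name
}


def tokenize(text: str) -> list[str]:
    """Tokenizing component (naive): split on whitespace, trim punctuation."""
    tokens = (t.strip(".,;:").lower() for t in text.split())
    return [t for t in tokens if t]


def merge_lexica(*lexica: Lexicon) -> Lexicon:
    """Combine the selected lexica; entries for the same form are pooled."""
    merged: dict[str, list[tuple[str, str, float]]] = defaultdict(list)
    for lexicon in lexica:
        for form, entries in lexicon.items():
            merged[form].extend(entries)
    return dict(merged)


def lookup(token: str, lexicon: Lexicon) -> list[tuple[str, str, float]]:
    """Lexical component: all candidate lemma-tag combinations for a token."""
    return lexicon.get(token, [(token, "UNKNOWN", 0.0)])


def rank(candidates: list[tuple[str, str, float]]) -> list[tuple[str, str, float]]:
    """Stand-in for the contextual component: order by lexicon weight only."""
    return sorted(candidates, key=lambda c: c[2], reverse=True)


def annotate(text: str, lexicon: Lexicon):
    """Run the three stages and return (token, ranked candidates) pairs."""
    return [(tok, rank(lookup(tok, lexicon))) for tok in tokenize(text)]


if __name__ == "__main__":
    combined = merge_lexica(MAIN_LEXICON, USER_LEXICON)
    for token, candidates in annotate("Die penninc, Aleyt.", combined):
        print(token, candidates)
```

Keeping all ranked candidates per token, rather than only the top one, mirrors the semi-automatic workflow: the system proposes the most likely options and a human specialist can disambiguate where needed.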

- Project leader: Dr. Hans van Halteren (Radboud University)

- CLARIN center: Max Planck Institute for Psycholinguistics (MPI)

- Help contact: http://tla.mpi.nl/contact (service)

- Website: http://adelheid.ruhosting.nl/

- User scenarios (screencasts, screenshots): http://adelheid.ruhosting.nl/download/Adelheid1_DemoScenario.pdf

- Manual:

- Tool/Service link:

  - Tagger-Lemmatizer: http://hdl.handle.net/1839/00-SERV-0000-0000-0006-4

  - Visualisation tool: http://hdl.handle.net/1839/00-SERV-0000-0000-0009-D

- Publications: n.a.